Accelerating Superconductivity Discovery through Deep Learning

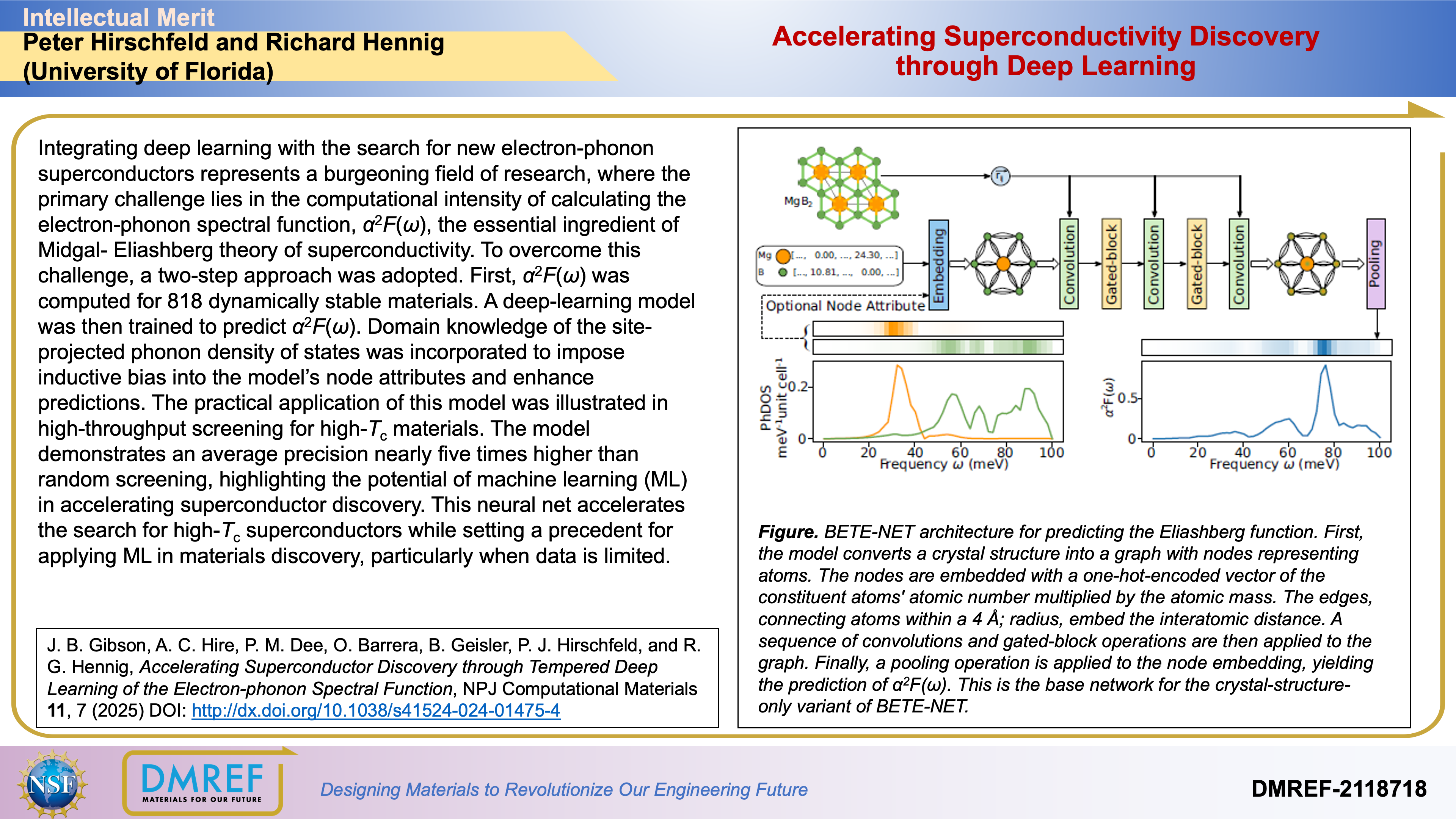

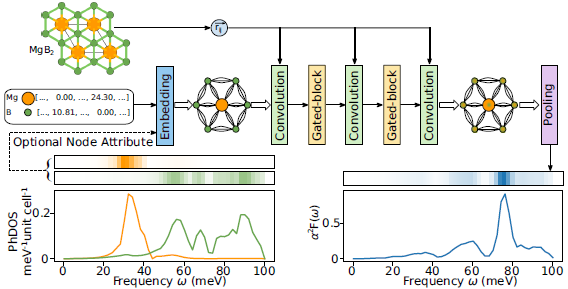

Integrating deep learning with the search for new electron-phonon superconductors is a burgeoning field of research. The primary challenge is the computational cost of calculating the electron-phonon spectral function, α²F(ω), the essential ingredient of the Migdal-Eliashberg theory of superconductivity. To overcome this challenge, a two-step approach was adopted. First, α²F(ω) was computed for 818 dynamically stable materials. Second, a deep-learning model was trained to predict α²F(ω).
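
To illustrate why α²F(ω) is the key quantity, the sketch below shows one common way to turn a sampled spectral function into a critical-temperature estimate: the Allen-Dynes formula, which uses the coupling constant λ = 2∫α²F(ω)/ω dω and the logarithmic average frequency ω_log. The text above does not say which Tc estimator was used, so the choice of Allen-Dynes, the function names, the value μ* = 0.1, and the flat toy spectrum are all assumptions made for illustration.

```python
import math


def trapezoid(y, x):
    """Trapezoidal integral of samples y over the grid x."""
    return sum(0.5 * (y[i] + y[i + 1]) * (x[i + 1] - x[i])
               for i in range(len(x) - 1))


def allen_dynes_tc(omega, a2f, mu_star=0.1):
    """Return (lambda, omega_log, Tc) from a sampled alpha^2F(omega).

    omega   : strictly positive, ascending phonon frequencies in kelvin
    a2f     : dimensionless alpha^2F values on the same grid
    mu_star : Coulomb pseudopotential (0.1 is an assumed, typical value)
    """
    # Electron-phonon coupling constant: lambda = 2 * int a2F(w)/w dw
    lam = 2.0 * trapezoid([a / w for a, w in zip(a2f, omega)], omega)
    # Logarithmic average frequency:
    # omega_log = exp[(2/lambda) * int ln(w) a2F(w)/w dw]
    log_moment = trapezoid(
        [a * math.log(w) / w for a, w in zip(a2f, omega)], omega)
    omega_log = math.exp(2.0 / lam * log_moment)
    # Allen-Dynes estimate; report Tc = 0 when the denominator is not positive
    denom = lam - mu_star * (1.0 + 0.62 * lam)
    if denom <= 0.0:
        return lam, omega_log, 0.0
    tc = omega_log / 1.2 * math.exp(-1.04 * (1.0 + lam) / denom)
    return lam, omega_log, tc


# Toy spectrum (made up, not from the dataset): alpha^2F = 0.5 on 100-300 K
grid = [100.0 + 0.1 * i for i in range(2001)]
spectrum = [0.5] * len(grid)
lam, omega_log, tc = allen_dynes_tc(grid, spectrum)
print(f"lambda={lam:.3f}, omega_log={omega_log:.1f} K, Tc={tc:.1f} K")
```

Because both λ and ω_log are integrals over α²F(ω), a model that predicts the full spectral function gives access to these derived quantities without a separate model for each.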

Domain knowledge of the site-projected phonon density of states was incorporated into the model’s node attributes, building in an inductive bias that improves predictions. The practical application of this model was illustrated in high-throughput screening for high-Tc materials. The model achieves an average precision nearly five times higher than random screening, highlighting the potential of machine learning (ML) in accelerating superconductor discovery. This neural network accelerates the search for high-Tc superconductors and sets a precedent for applying ML in materials discovery, particularly when data are limited.
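
The comparison with random screening can be made concrete with a small sketch of how average precision is computed for a ranked candidate list. For a random ranking, the expected average precision is approximately the fraction of true high-Tc materials in the pool, so that fraction serves as the baseline here. The function names, labels, and scores below are invented for illustration and are not results from the study.

```python
def average_precision(labels, scores):
    """Average precision of a ranking: mean of precision@k over the
    ranks k at which a true positive appears (highest score first)."""
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    hits = 0
    precision_sum = 0.0
    for rank, (_, label) in enumerate(ranked, start=1):
        if label:
            hits += 1
            precision_sum += hits / rank
    return precision_sum / hits if hits else 0.0


def random_baseline(labels):
    """Approximate expected average precision of a random ranking:
    the fraction of positives in the candidate pool."""
    return sum(labels) / len(labels)


# Hypothetical screening pool: 1 marks a material above the Tc threshold
labels = [1, 0, 1, 0, 0, 0, 0, 0, 0, 0]
# Hypothetical model scores (e.g. predicted Tc), one per candidate
scores = [0.9, 0.8, 0.7, 0.6, 0.5, 0.4, 0.3, 0.2, 0.1, 0.05]

ap = average_precision(labels, scores)
baseline = random_baseline(labels)
print(f"AP={ap:.3f}, random baseline={baseline:.3f}, ratio={ap / baseline:.2f}")
```

A ratio well above one means that candidates near the top of the ranked list are far more likely to be genuine hits than candidates drawn at random, which is what matters when only a few materials can be followed up with full calculations.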